What are dataflows?

Dataflows are a self-service, cloud-based technology designed to simplify the process of data preparation. They empower users to ingest, transform, and load data into various environments, including Microsoft Dataverse, Power BI workspaces, or your organization’s Azure Data Lake Storage account.

At their core, dataflows are built using Power Query, a powerful data connectivity and preparation tool that many are already familiar with from its use in Microsoft Excel and Power BI. This makes it easier for users to work with dataflows without needing to learn a new system from scratch.

One of the key advantages of dataflows is their flexibility. They can run on demand or automatically on a schedule, so the data they produce is as current as the most recent run. This gives businesses a regular supply of accurate, timely data for making informed decisions.

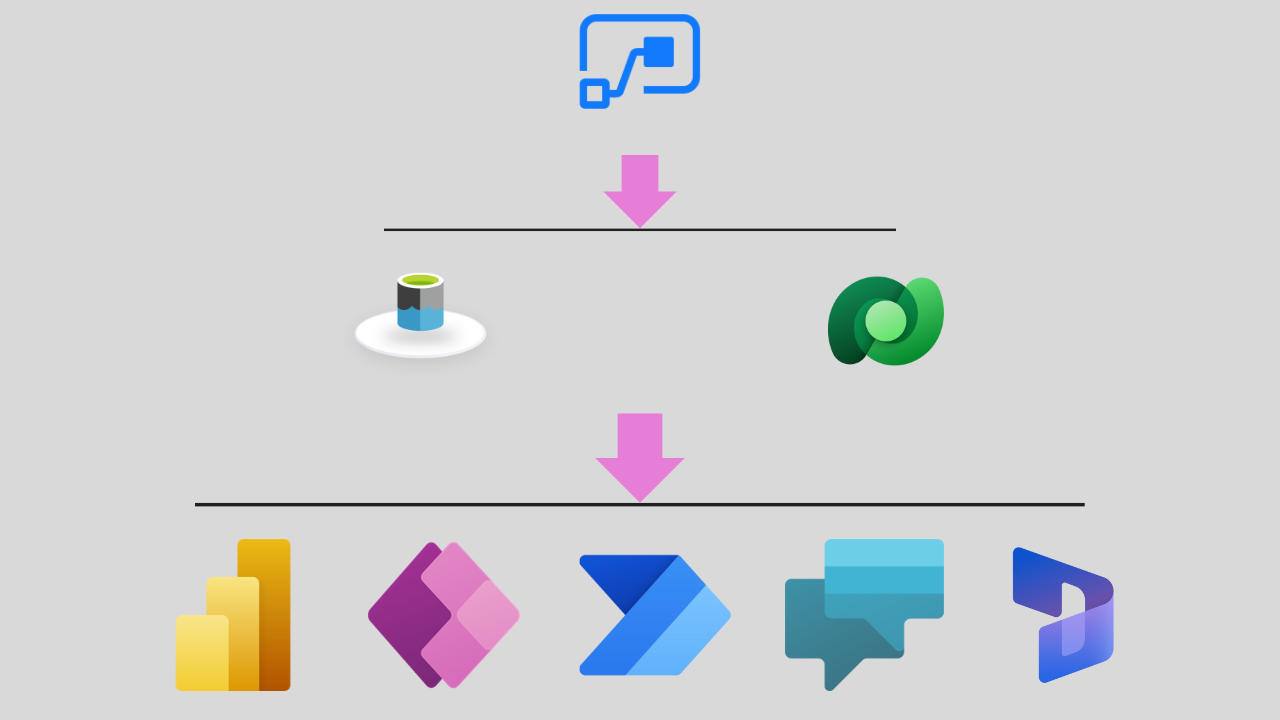

Dataflows Across Multiple Microsoft Products

Dataflows are also versatile. They can be created and used in multiple Microsoft products without a separate, dataflow-specific license. They are available in Power Apps, Power BI, and Dynamics 365 Customer Insights, and the ability to create and run dataflows is included in the licenses for these products.

While the core features of dataflows are consistent across these products, some product-specific features may exist only in dataflows created in a particular product. This lets each product tailor dataflows to its own requirements.

Whether you’re working within Power Apps, Power BI, or Dynamics 365, dataflows provide a unified and efficient way to manage your data, streamlining your workflows and ensuring that your data preparation processes are integrated seamlessly with the Microsoft tools you already use.

The Dataflow Process: From Source to Destination

Dataflows streamline the journey of data from various origins to your chosen destinations within the Microsoft ecosystem. Here’s a breakdown of how this process unfolds:

- Data Ingestion from Diverse Sources

Dataflows support more than 80 data sources, ranging from traditional databases and cloud services to files and web APIs. This broad support lets you gather data from most of the platforms you already use and build a more complete view of your information landscape.

- Data Transformation with Power Query

Once the data is ingested, it is transformed using the Power Query authoring experience. Power Query lets users define a series of transformations, such as filtering, aggregating, and merging data, without writing complex code. The dataflow engine then runs these transformations so that the data is cleansed and structured for analysis.

- Data Loading to Preferred Destinations

After the transformation phase, the refined data is loaded into your chosen output destination. This could be a Microsoft Power Platform environment, a Power BI workspace, or your organization’s Azure Data Lake Storage account. This flexibility allows teams to store and access data where it aligns best with their analytical and operational workflows.

By orchestrating these stages—ingestion, transformation, and loading—dataflows provide a robust framework for efficient data management, enabling organizations to harness the full potential of their data assets.
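The three stages can be illustrated outside of any Microsoft product with a minimal Python sketch. It is not how the dataflow engine is implemented, and the names used here (`ingest`, `transform`, `load`, `run_dataflow`, the sample CSV) are hypothetical; in a real dataflow the transformation step is authored in Power Query and the destination is a cloud store rather than an in-memory list.

```python
import csv
import io

# Hypothetical source: a small CSV export standing in for a real data source.
RAW_CSV = """order_id,region,amount
1,West,120.50
2,East,
3,West,79.50
4,North,200.00
"""


def ingest(text):
    """Ingestion: read raw rows from the source as dictionaries of strings."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transformation: drop rows with a missing amount and convert types."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        cleaned.append({
            "order_id": int(row["order_id"]),
            "region": row["region"],
            "amount": float(row["amount"]),
        })
    return cleaned


def load(rows, destination):
    """Loading: replace the contents of the destination table with the new rows."""
    destination.clear()
    destination.extend(rows)
    return len(rows)


def run_dataflow(source_text, destination):
    """One run: ingestion, then transformation, then loading."""
    return load(transform(ingest(source_text)), destination)


orders_table = []  # stand-in for a destination table
loaded = run_dataflow(RAW_CSV, orders_table)
print(loaded, [row["order_id"] for row in orders_table])
```

Running the sketch loads the three complete orders and skips the one with a missing amount. Running it again replaces the destination contents, which loosely mirrors a refresh that overwrites the previous result.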

Dataflows: A Cloud-Based Solution

Dataflows operate entirely in the cloud, offering a seamless and scalable approach to data management. Here’s how their cloud-centric design works:

- Cloud-Based Authoring and Storage

When you create and save a dataflow, its definition—essentially, the blueprint of the dataflow—is stored securely in the cloud. This cloud storage ensures that your dataflow is easily accessible, regardless of your location or device, and it also simplifies collaboration among team members.

- Cloud-Driven Execution

The execution of dataflows also takes place in the cloud. When a dataflow run is triggered—whether manually or on a set schedule—the data transformation and computation are carried out entirely in the cloud. This means that the heavy lifting, such as processing large datasets and performing complex transformations, happens on cloud infrastructure, freeing up local resources and enhancing performance.

-

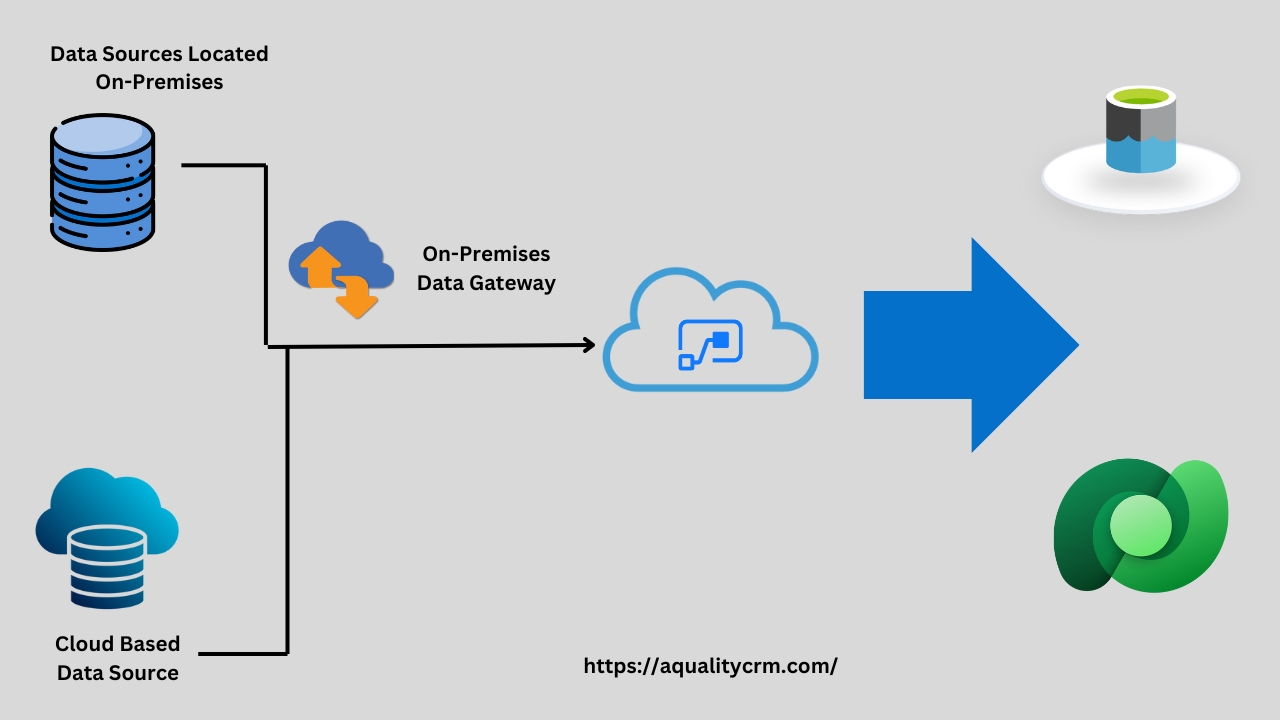

Handling On-Premises Data Sources

For organizations with on-premises data sources, dataflows can still be utilized without any issues. By using an on-premises data gateway, you can securely extract data from on-premises systems and move it to the cloud, where the dataflow can then process it. This gateway acts as a bridge, enabling seamless integration between on-premises data and cloud-based dataflows.

- Cloud-Based Destinations

Once the dataflow has processed the data, it is always loaded into a cloud-based destination, such as a Microsoft Power Platform environment, a Power BI workspace, or Azure Data Lake Storage. This ensures that the data remains easily accessible, scalable, and ready for analysis or further use.

By leveraging the power and flexibility of the cloud, dataflows provide a robust and efficient way to manage and process data, whether your sources are cloud-based or on-premises.

The Power Behind Dataflows: Advanced Transformations with Power Query

At the heart of dataflows is the Power Query transformation engine, a robust tool designed to handle complex data transformations with ease. Here’s how this powerful engine enhances the dataflow experience:

- Advanced Transformation Capabilities

Power Query is a versatile transformation engine capable of supporting a wide range of advanced data manipulations. Whether you need to perform complex calculations, filter and aggregate large datasets, or merge data from multiple sources, Power Query has the tools to make it happen. Its powerful engine ensures that even the most sophisticated transformations are processed efficiently.

-

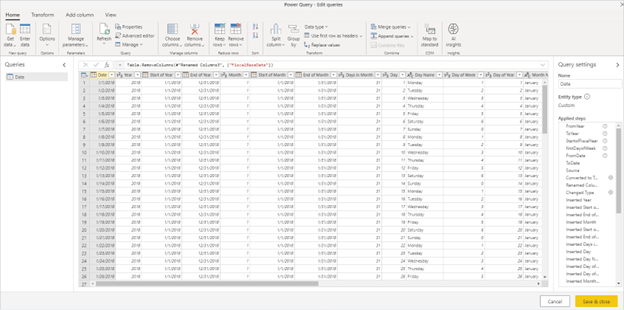

User-Friendly Interface with Power Query Editor

Despite its advanced capabilities, Power Query remains accessible thanks to its Power Query Editor, a graphical user interface that simplifies the data transformation process. The editor is designed to be intuitive, allowing users to create and configure data transformations without needing deep technical expertise. You can visually build your data integration solutions, making the process faster and more straightforward.

- Rapid Development of Data Integration Solutions

With the combination of a powerful transformation engine and a user-friendly interface, dataflows let you develop data integration solutions more quickly and with less effort. Power Query Editor provides a visual authoring experience in which users can preview the result of each transformation, adjust steps as needed, and confirm that the final data is what downstream applications require.

By leveraging the Power Query transformation engine, dataflows empower users to handle complex data tasks with ease, speeding up the development process while maintaining the flexibility to perform intricate transformations. This makes dataflows a valuable tool for any organization looking to streamline their data integration processes.
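To make the three transformation types mentioned above (filtering, aggregating, and merging) concrete, here is a minimal sketch in plain Python. It only illustrates what each operation does to a table; in a dataflow these would be Power Query steps built in the editor, and the sample tables and function names below are hypothetical.

```python
from collections import defaultdict

# Hypothetical sample tables; a real dataflow would read these from a data source.
orders = [
    {"customer_id": 1, "amount": 40.0},
    {"customer_id": 2, "amount": 5.0},
    {"customer_id": 1, "amount": 60.0},
    {"customer_id": 3, "amount": 25.0},
]
customers = [
    {"customer_id": 1, "name": "Contoso"},
    {"customer_id": 3, "name": "Fabrikam"},
]


def filter_rows(rows, min_amount):
    """Filter: keep only orders at or above a minimum amount."""
    return [r for r in rows if r["amount"] >= min_amount]


def aggregate_by_customer(rows):
    """Aggregate: total order amount per customer, sorted by customer_id."""
    totals = defaultdict(float)
    for r in rows:
        totals[r["customer_id"]] += r["amount"]
    return [{"customer_id": cid, "total": total} for cid, total in sorted(totals.items())]


def merge_with_customers(totals, customer_rows):
    """Merge: inner join of the totals with the customer table on customer_id."""
    names = {c["customer_id"]: c["name"] for c in customer_rows}
    return [
        {"name": names[t["customer_id"]], "total": t["total"]}
        for t in totals
        if t["customer_id"] in names
    ]


result = merge_with_customers(
    aggregate_by_customer(filter_rows(orders, min_amount=10.0)),
    customers,
)
print(result)
```

Each function takes a table and returns a new one, so the steps chain in order, much like the list of applied steps that Power Query Editor records for a query.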

Seamless Integration with Microsoft Power Platform and Dynamics 365

One of the standout features of dataflows is their deep integration with the Microsoft Power Platform and Dynamics 365, making it easier to leverage the data they produce across various applications and services. Here’s how this integration works:

- Cloud-Based Data Storage

When a dataflow processes data, it stores the resulting tables in cloud-based storage. This cloud-centric approach not only ensures that your data is easily accessible but also enables seamless interaction with other services within the Microsoft ecosystem.

- Versatile Connectivity Options

Depending on the destination you configure during dataflow creation, the data produced by a dataflow can be accessed through several means:

- Dataverse: As a central data repository, Dataverse allows applications like Power BI, Power Apps, and Dynamics 365 to easily connect to and utilize the data generated by dataflows.

- Power Platform Dataflow Connector: This connector provides a direct link between the dataflow output and other Power Platform services, enabling smooth data integration across different tools.

- Direct Access via Azure Data Lake Storage: If the dataflow’s destination is Azure Data Lake Storage, other services can directly access and interact with the data stored there, offering even greater flexibility for large-scale data solutions.

- Integration with Microsoft Services

The integration possibilities are extensive:

- Power BI: Use dataflows to prepare and clean your data before visualizing it in Power BI dashboards and reports.

- Power Apps: Leverage dataflows to feed data into custom apps built on Power Apps, ensuring that your applications are always powered by the most accurate and up-to-date information.

- Power Automate: Automate workflows that respond to changes in dataflow outputs, driving efficiency across your business processes.

- Power Virtual Agents (now Microsoft Copilot Studio): Feed data from dataflows into chatbots created with Power Virtual Agents, providing users with intelligent, data-driven responses.

- Dynamics 365: Integrate dataflows directly with Dynamics 365 applications to enhance CRM, ERP, and other business functions with clean, prepared data.

By storing data in the cloud and offering multiple ways to access and interact with it, dataflows enable a high level of integration across the Microsoft Power Platform and Dynamics 365. This ensures that your data is not just stored but actively utilized across the tools that drive your business.

Benefits of Using Dataflows

Dataflows offer a range of benefits that make them a powerful tool for managing data across various Microsoft products. Here are some key advantages:

- Separation of Data Transformation from Modeling and Visualization

Dataflows decouple the data transformation layer from the modeling and visualization layers in Power BI solutions. This separation allows for greater flexibility and cleaner architecture, as the transformation logic is centralized rather than scattered across different reports and models.

- Centralized Data Transformation Code

Instead of having transformation code spread across multiple artifacts, a dataflow allows this code to reside in a single, central location. This centralization simplifies maintenance, makes it easier to update or modify the transformation logic, and ensures consistency across the organization.

- Simplified Skill Requirements

Creating a dataflow requires only Power Query skills. In environments with multiple creators, the dataflow creator can be one member of a team that together builds the overall BI solution or operational application. This reduces the need for specialized skills and makes dataflows accessible to a broader range of users.

- Product-Agnostic Flexibility

Dataflows are not limited to Power BI. They are product-agnostic, meaning the data they produce can be accessed by other tools and services within the Microsoft ecosystem. This flexibility enhances their value and applicability across different platforms and use cases.

- Self-Service Data Transformation with Power Query

Dataflows leverage Power Query, offering a graphical, self-service data transformation experience that is both powerful and easy to use. This allows users to perform advanced data transformations without needing a deep technical background.

- Cloud-Based Operation

Running entirely in the cloud, dataflows require no additional infrastructure. This makes them easy to deploy and scale, reducing the need for on-premises resources and IT support.

- Multiple Entry Points

You can start working with dataflows using various licenses, including those for Power Apps, Power BI, and Customer Insights. This flexibility makes it easier for organizations to integrate dataflows into their existing workflows.

- Designed for Self-Service Scenarios

Although capable of handling advanced transformations, dataflows are designed for self-service scenarios. They are accessible to users without an IT or developer background, enabling more team members to contribute to data preparation and integration tasks.

Use-Case Scenarios for Dataflows

Dataflows are versatile tools that can be applied in various scenarios, streamlining data management and transformation across different platforms and business needs. Here are some common use cases for dataflows:

- Data Migration from Legacy Systems

When an organization moves from a legacy on-premises system to a modern interface like Power Apps, dataflows can facilitate the migration. Power Apps, Power Automate, and AI Builder all use Dataverse as their primary data storage system. By using dataflows, the organization can migrate existing data from the on-premises system into Dataverse. Once the data is in Dataverse, these applications can use it directly, which helps keep the transition smooth and limits disruption to operations.

-

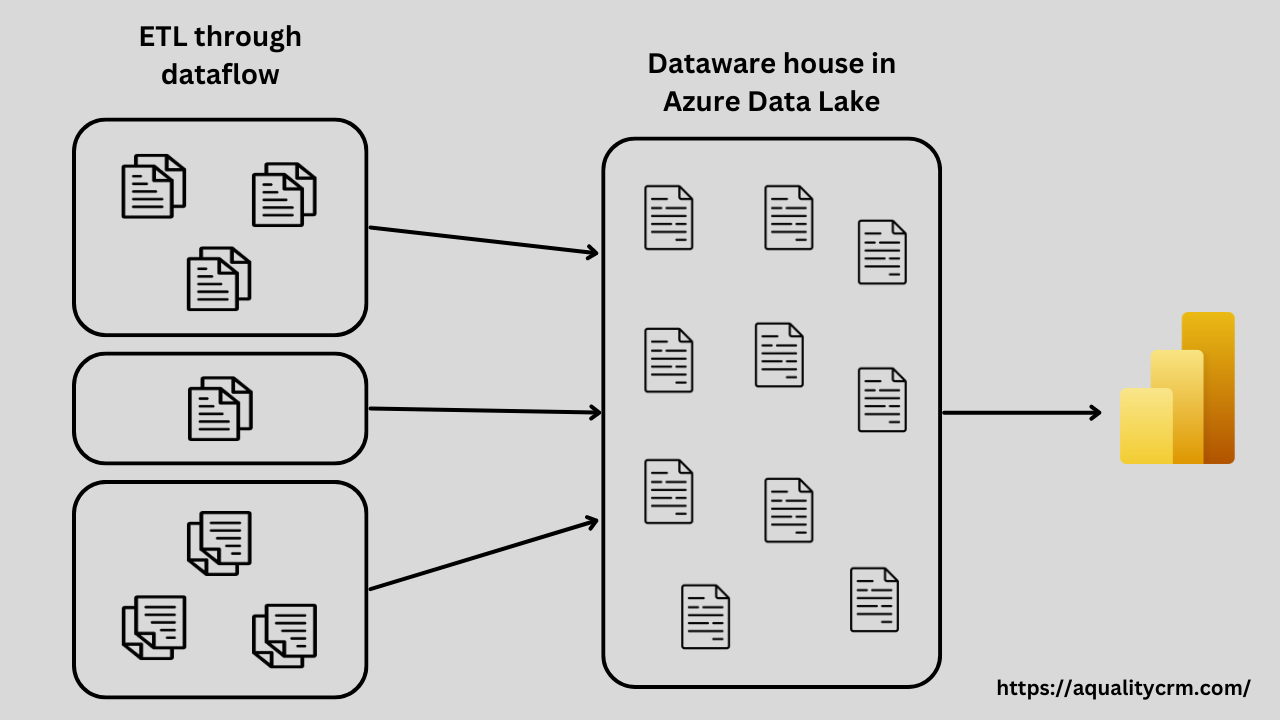

Building a Data Warehouse with Dataflows

Dataflows can serve as a replacement for traditional extract, transform, load (ETL) tools in constructing a data warehouse. In this scenario, a company’s data engineers decide to use dataflows to design their star-schema data warehouse, which includes both fact and dimension tables stored in Azure Data Lake Storage. Power BI can then be used to generate reports and dashboards by accessing the data from these dataflows. This approach simplifies the data warehousing process, reduces dependency on complex ETL tools, and leverages the cloud-based capabilities of dataflows for efficient data management.

- Creating a Dimensional Model with Dataflows

Similar to building a data warehouse, dataflows can also be used to create a dimensional model, replacing traditional ETL tools. In this use case, data engineers design a star-schema dimensional model with fact and dimension tables stored in Azure Data Lake Storage Gen2. Power BI is then used to create reports and dashboards based on the data from these dataflows. This approach ensures that the data model is optimized for analytical queries, enhancing performance and scalability while reducing the complexity of managing multiple data sources.

- Centralizing Data Preparation and Reusing Semantic Models Across Power BI Solutions

In environments where multiple Power BI solutions require the same transformed version of a table, dataflows can significantly improve efficiency. Without dataflows, the table would be created separately in each solution, increasing the load on the source system, consuming more resources, and creating duplicate data with multiple points of failure. When a single dataflow computes the data for all solutions, Power BI can reuse the results across different reports and dashboards. This approach reduces the risk of errors, lowers maintenance costs, and keeps the organization’s data assets consistent. Dataflows thus become a central component of a robust Power BI architecture, minimizing code duplication and making the data integration layer easier to manage.
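The effect of centralizing preparation on source-system load can be shown with a small Python sketch. Everything in it is hypothetical (the `CountingSource` class, the sample rows, the two "reports"); it only contrasts repeating the same preparation in each solution with preparing the table once and reusing the result.

```python
class CountingSource:
    """Hypothetical source system that counts how often it is queried."""

    def __init__(self):
        self.reads = 0

    def read(self):
        self.reads += 1
        return [
            {"product": "A", "units": 3},
            {"product": "B", "units": 0},
            {"product": "A", "units": 2},
            {"product": "C", "units": 4},
        ]


def prepare_sales(source):
    """The shared transformation: drop rows with zero units."""
    return [row for row in source.read() if row["units"] > 0]


def units_report(table):
    """First consumer: total units sold."""
    return sum(row["units"] for row in table)


def products_report(table):
    """Second consumer: distinct products sold."""
    return sorted({row["product"] for row in table})


# Without a shared dataflow: each solution repeats the preparation.
separate_source = CountingSource()
units_separate = units_report(prepare_sales(separate_source))
products_separate = products_report(prepare_sales(separate_source))

# With a shared dataflow: prepare once, then reuse the stored result.
shared_source = CountingSource()
shared_table = prepare_sales(shared_source)
units_shared = units_report(shared_table)
products_shared = products_report(shared_table)

print(separate_source.reads, shared_source.reads)
```

Both approaches produce the same report values, but the shared version queries the source once instead of once per solution, and the transformation logic lives in a single place.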

These scenarios highlight the flexibility and power of dataflows in various business contexts, from migrating legacy data to building complex data models and optimizing Power BI implementations. By integrating dataflows into your data strategy, you can streamline processes, reduce costs, and ensure that your data is always ready for analysis and decision-making.